Tagged “Programming”

Using ESPHome Without the Home Assistant Addon

The "blessed" flow for using ESPHome is the Home Assistant ESPHome Addon. This works well! It has a nice editor and it takes care of some housekeeping tasks for you. If you don't already have a comfortable development workflow it's a very nice way to start.

If you do already have a working style that doesn't involve a web UI and a browser editor you can still use ESPHome, you just have to handle those housekeeping tasks yourself.

Fundamentals

I think it can be helpful to step back and take a look at the fundamentals of a piece of software like ESPHome before diving headlong into the deep pool of non-standard workflows.

At its base level ESPHome is a microcontroller firmware generator. That is, it reads your YAML config, generates a bunch of C++ files and config files, and then, using a compiler and some helper programs, generates a binary program that your microcontroller (usually but not always an ESP32) can run.

ESPHome also has a few very useful helpers. First, it can do seamless over the air (OTA) updates once any ESPHome firmware has been installed on a device.

Second, it has a pretty powerful web-based UI and configuration editor.

Third, ESPHome ships with a "native" binary protocol it can use to talk to Home Assistant (previously) complete with Noise-based symmetric key encryption.

Lastly, it can be used as a Home Assistant addon, which as I said earlier takes care of a few things for you. The OTA update functionality requires a pre-shared key to validate that updates are coming from a known source. The addon takes care of generating that and the Noise secret and sharing these keys with Home Assistant so you don't have to care about them.

ESPHome on a Macbook?!

My ESPHome workflow doesn't involve the web UI or the addon at all.

Instead, I install ESPHome on my Macbook with homebrew and manage the OTA and HA secret keys with 1Password and a small helper script.

The script and all of my ESPHome configs live in this public GitHub repo.

This is the script as it exists today:

#!/bin/bash

set -x
set -eo pipefail

trap "rm common/device_base.yaml" EXIT

op inject --in-file common/device_base.yaml.in --out-file common/device_base.yaml

command=$1
shift

if [ $# -eq 0 ]; then
  configs="*.yaml"
else
  configs="$@"
fi

esphome $command $configs

All this really does is use 1Password's op inject tool to generate a file with my configured secrets, run esphome, and clean up the generated file with that trap line.
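The generate, run, clean up pattern is not specific to bash. Here is a minimal Python sketch of the same idea, assuming a hypothetical `fake_render` function standing in for `op inject` (the real tool resolves references against 1Password; this toy version just does a string replace). The `finally` block plays the role of the `trap` line.

```python
import contextlib
import os
import tempfile


@contextlib.contextmanager
def rendered_config(template_text, out_path, render):
    """Write render(template_text) to out_path and delete it on exit."""
    with open(out_path, "w") as f:
        f.write(render(template_text))
    try:
        yield out_path
    finally:
        # Same job as the shell trap: never leave secrets on disk.
        os.remove(out_path)


def fake_render(text):
    # Hypothetical stand-in for `op inject`; not how the real tool works.
    return text.replace("op://vault/item/field", "hunter2")


out = os.path.join(tempfile.mkdtemp(), "device_base.yaml")
template = 'ota_password: "op://vault/item/field"\n'

with rendered_config(template, out, fake_render) as path:
    # This is the point where esphome would run against the rendered file.
    with open(path) as f:
        rendered = f.read()

file_still_exists = os.path.exists(out)
```

The cleanup runs even if the body of the `with` block raises, which is the property that matters here.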

The top of device_base.yaml.in looks like this:

substitutions:
  wifi_ssid: "op://keen.land secrets/ESPHome Secrets/ESPHOME_WIFI_SSID"
  wifi_password: "op://keen.land secrets/ESPHome Secrets/ESPHOME_WIFI_PASSWORD"
  fallback_ssid_password: "op://keen.land secrets/ESPHome Secrets/ESPHOME_FALLBACK_SSID_PASSWORD"
  home_assistant_encryption_key: "op://keen.land secrets/ESPHome Secrets/ESPHOME_HOME_ASSISTANT_ENCRYPTION_KEY"
  ota_password: "op://keen.land secrets/ESPHome Secrets/ESPHOME_OTA_PASSWORD"

All of those are just text entries in the ESPHome Secrets rich text item, but again they can be whatever you want.

If you decide to make them password type entries I believe you'd need to add --reveal to the op inject command, but I'm not 100% certain on that.

This differs in kind of a fundamental way from the way the web UI / addon work, insofar as the addon will create and manage unique OTA and HA keys for each device. My setup instead uses two keys shared among all of my devices. I don't see this as a significant risk because I don't use ESPHome devices in higher security contexts (i.e. my door locks are not running ESPHome), but your threat model is likely different than mine so you should make your own decisions. Nothing is stopping you from using unique keys for every device with this setup; you just have more secrets to manage in 1Password.
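If you do go the unique-key route, generating the secrets is the easy part. The sketch below assumes the API encryption key is 32 random bytes encoded as base64 (the format ESPHome's docs describe for `api: encryption: key:`) and treats the OTA password as an arbitrary random string. The device names are made up for illustration.

```python
import base64
import secrets


def new_api_key() -> str:
    """Return 32 random bytes, base64-encoded, for the API encryption key."""
    return base64.b64encode(secrets.token_bytes(32)).decode("ascii")


def new_ota_password(length: int = 32) -> str:
    """Return a random URL-safe string to use as an OTA password."""
    return secrets.token_urlsafe(length)[:length]


# Hypothetical device names; one key pair per device.
devices = ["garage-lights", "office-sensor"]
keys = {
    name: {"api": new_api_key(), "ota": new_ota_password()}
    for name in devices
}
```

Each pair would then go into its own 1Password entry and be referenced from that device's substitutions.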

Workflow

My workflow looks like:

$ <edit whatever.yaml>

$ ./build.sh run whatever.yaml

# a bunch of compiler output and then logging from the device itself

$ git add whatever.yaml && git commit -m 'updates' && git push origin main

There aren't many hard edges with this setup.

You can put whatever you want into common and you can organize your devices however you want.

One exception is that secrets.yaml has some confusing implicit behavior, so I just commit an empty one and use a different file for my secrets.

Updating ESPHome is not something I do on a regular basis, but when I do it's basically just brew upgrade esphome && ./build.sh.

The process to add a new ESPHome device to Home Assistant is also fairly streamlined.

All you do is attach the ESP32 device to your computer with USB, create a new yaml file, and run ./build.sh run <your new file>.yaml.

ESPHome will pick up that there's a serially attached device without firmware and handle flashing the new firmware to it.

Once ESPHome is running on the new device it should show up on the Home Assistant integrations page as something that can be added. Clicking the accept button will open a config flow where you can paste your Home Assistant key, and then it should work just like any other device.

ESPHome Server in Python

Last year I installed holiday lights on my house (previously). At the time I chose a controller that had the following key qualities: a) cheap, b) worked with WLED, c) cheap.

After using it for a season I discovered that the reason why this controller is so cheap is because it uses an ESP8266, which is fine, but it doesn't play well with my setup. For example, if I enable the Home Assistant integration the controller falls over after a few hours. It also reboots for unknowable reasons sometimes and I would come home to find the lights in their default orange color.

I probably could have fixed this with a more powerful controller. I even bought a neat ESP32 controller with a built-in wide range voltage regulator but never got around to setting it up.

Loosely, what I want is:

- Control the lights without random reboots

- Easy Home Assistant integration

- Easy customization

- No Wi-Fi

- Use hardware that I already have

- Tailscale, ideally

- Learn some stuff

I could have gotten the first two using a more powerful ESP32 module. The third could be done with ESPHome. The fourth and fifth are contradictory while staying within the constraint of an ESP32-based system.

Also last year, I built a little box that controls the power for my 3D printer with a Raspberry Pi Pico and a second Klipper instance (previously) so naturally I tried to get Klipper to fit in this non-3d-printer shaped hole. I tried so hard.

On the surface, Klipper appears to do everything that I want (control addressable LEDs, kind of customizable) but it makes no compromises in wanting to be a 3D printer controller.

Most of the firmware is dedicated to running a motion controller, there's a lot of emphasis on scheduling things to happen in the near future, and there's a global printer object.

Importantly for my purposes, there's no built-in way to set up a digital input without declaring it a button.

It's fine. Klipper is fine. It's just not built to be a generic IO platform.

So, what's a reasonable rational person to do?

Write an ESPHome Protocol Server

Of course.

There are essentially three ways to get arbitrary devices and entities to show up automatically in Home Assistant.

First, one can write a Home Assistant integration. This is fine and good but it doesn't work for me because my devices are far away from the VM that Home Assistant runs in.

Second, there's MQTT autodiscovery. I know this works because it's how my Zigbee devices integrate with HA, but I just could not make any of the existing generic autodiscovery libraries work consistently. Usually I would end up with a bunch of duplicate MQTT devices and then HA would get confused.

Third, there's ESPHome. ESPHome is a firmware for ESP modules (think: small devices with wifi like plugs, air quality monitors, etc). ESPHome belongs to the Open Home Foundation, same as Home Assistant, so it has commercial support and a first class HA integration. I already have a bunch of ESPHome devices running in my house, so it seems like a pretty natural fit.

The normal and ordinary way of using ESPHome is to write some YAML config that ESPHome compiles into a firmware for your device, then you flash the device and HA sets itself up to interact with the entities you described in YAML. What I want to do is just that last bit, the part where I can tell HA what entities I have and it sets up UI for me.

HA talks to ESPHome over what they call their "native API". The native API is a TCP-based streaming protocol where the ESPHome device is the server and Home Assistant is the client. They exchange protocol buffer encoded messages over either plain TCP or with a Noise-based encryption scheme.
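To make the plain TCP variant a little more concrete: as I understand the plaintext framing, each message is a zero byte, a varint payload length, a varint message type, and then the protobuf-encoded payload. The sketch below implements only that framing, with hypothetical function names, and uses raw bytes in place of a real protobuf message. Check the layout against aioesphomeapi before relying on it.

```python
def encode_varint(value: int) -> bytes:
    """Encode a non-negative integer as a protobuf-style varint."""
    out = bytearray()
    while True:
        byte = value & 0x7F
        value >>= 7
        if value:
            out.append(byte | 0x80)  # more bytes follow
        else:
            out.append(byte)
            return bytes(out)


def decode_varint(data: bytes, pos: int) -> tuple[int, int]:
    """Decode a varint starting at pos; return (value, next position)."""
    result = 0
    shift = 0
    while True:
        byte = data[pos]
        pos += 1
        result |= (byte & 0x7F) << shift
        if not byte & 0x80:
            return result, pos
        shift += 7


def encode_frame(msg_type: int, payload: bytes) -> bytes:
    """Build one plaintext frame: preamble, length, type, payload."""
    return b"\x00" + encode_varint(len(payload)) + encode_varint(msg_type) + payload


def decode_frame(data: bytes) -> tuple[int, bytes]:
    """Split one plaintext frame into (message type, payload)."""
    if data[0] != 0x00:
        raise ValueError("not a plaintext frame")
    length, pos = decode_varint(data, 1)
    msg_type, pos = decode_varint(data, pos)
    return msg_type, data[pos:pos + length]
```

The encrypted variant wraps messages differently, so none of this applies once Noise is enabled.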

Over the last week or so I built a Python implementation of that protocol named aioesphomeserver, bootstrapping off of the official aioesphomeapi client library that HA uses.

A Minimal Example

Here's a very simple example of what aioesphomeserver looks like:

import asyncio

from aioesphomeserver import (
    Device,
    SwitchEntity,
    BinarySensorEntity,
    EntityListener,
)


class SwitchListener(EntityListener):
    # Mirror the switch's state onto the binary sensor.
    async def handle(self, key, message):
        sensor = self.device.get_entity("test_binary_sensor")
        if sensor is not None:
            await sensor.set_state(message.state)


device = Device(
    name = "Test Device",
    mac_address = "AC:BC:32:89:0E:C9",
)

device.add_entity(
    BinarySensorEntity(
        name = "Test Binary Sensor",
    )
)

device.add_entity(
    SwitchEntity(
        name = "Test Switch",
    )
)

device.add_entity(
    SwitchListener(
        name="_listener",
        entity_id="test_switch"
    )
)

asyncio.run(device.run())

From the top, we import a bunch of stuff and then create a class that listens for messages from the device (the handle method).

Then, we set up a device with a name and a fake MAC address. Device can generate a random one for you but it doesn't persist, so if you want this device to stick around in HA you should declare a static MAC.

We then add some entities to it: a binary sensor, a switch, and an instance of our switch listener configured for Test Switch.

Finally, we start the asyncio event loop.
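On the static MAC point: if you don't want to invent addresses by hand, one option is to derive one from the device name so it stays the same across restarts. This is a sketch with a hypothetical helper, not something aioesphomeserver provides. It sets the locally administered bit so the result can't collide with a vendor-assigned address.

```python
import hashlib


def stable_mac(name: str) -> str:
    """Derive a repeatable, locally administered MAC address from a name."""
    octets = bytearray(hashlib.sha256(name.encode("utf-8")).digest()[:6])
    # Set the locally administered bit and clear the multicast bit.
    octets[0] = (octets[0] | 0x02) & 0xFE
    return ":".join(f"{b:02X}" for b in octets)


mac = stable_mac("Test Device")
```

The resulting string can be passed as `mac_address` when constructing the `Device`.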

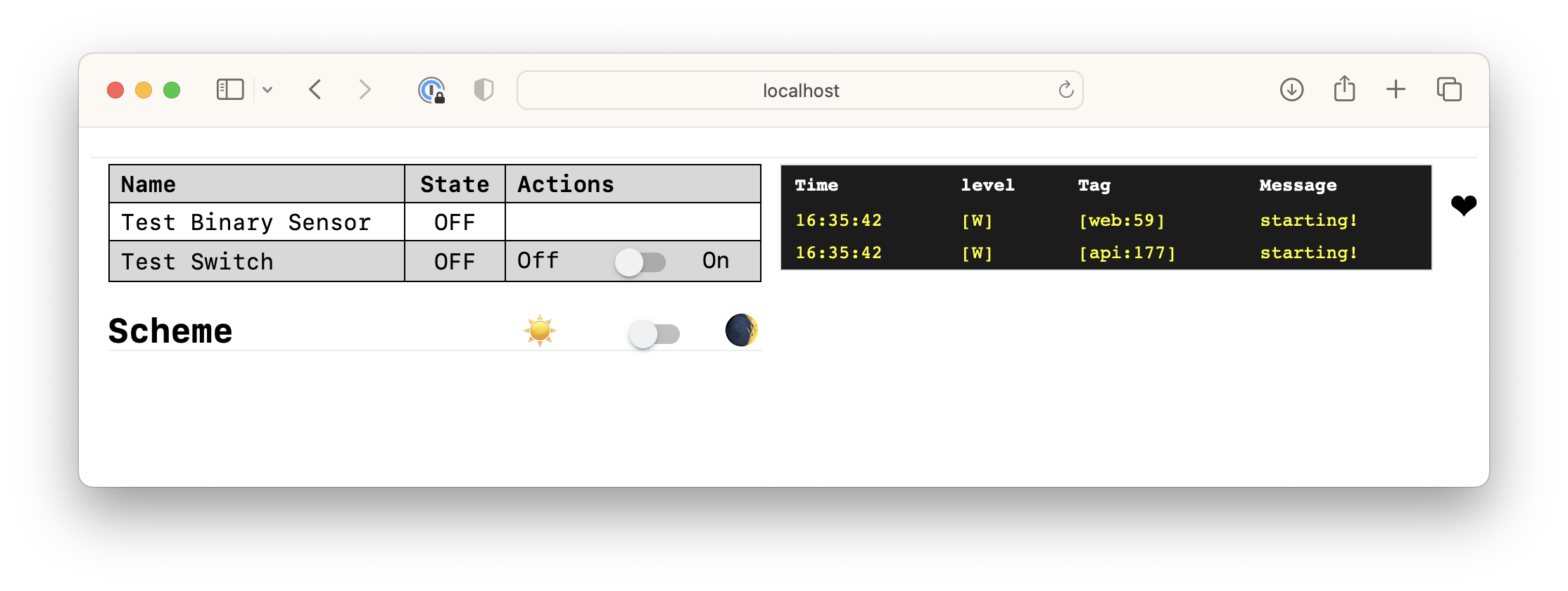

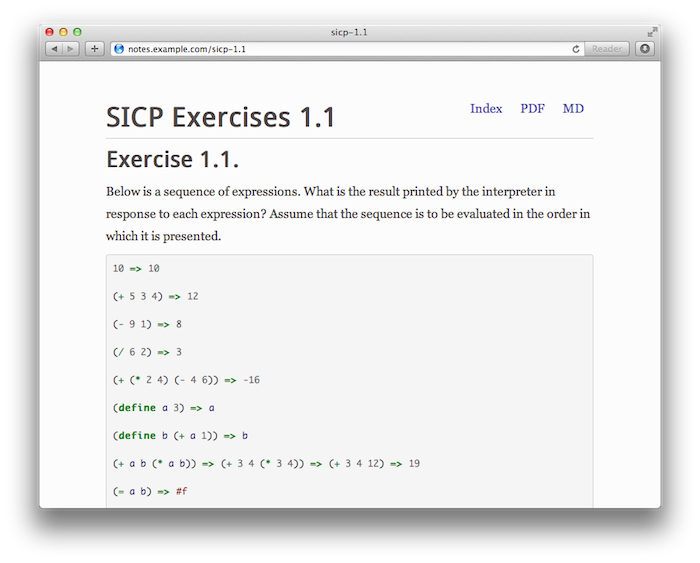

With just that, you get the ESPHome web UI:

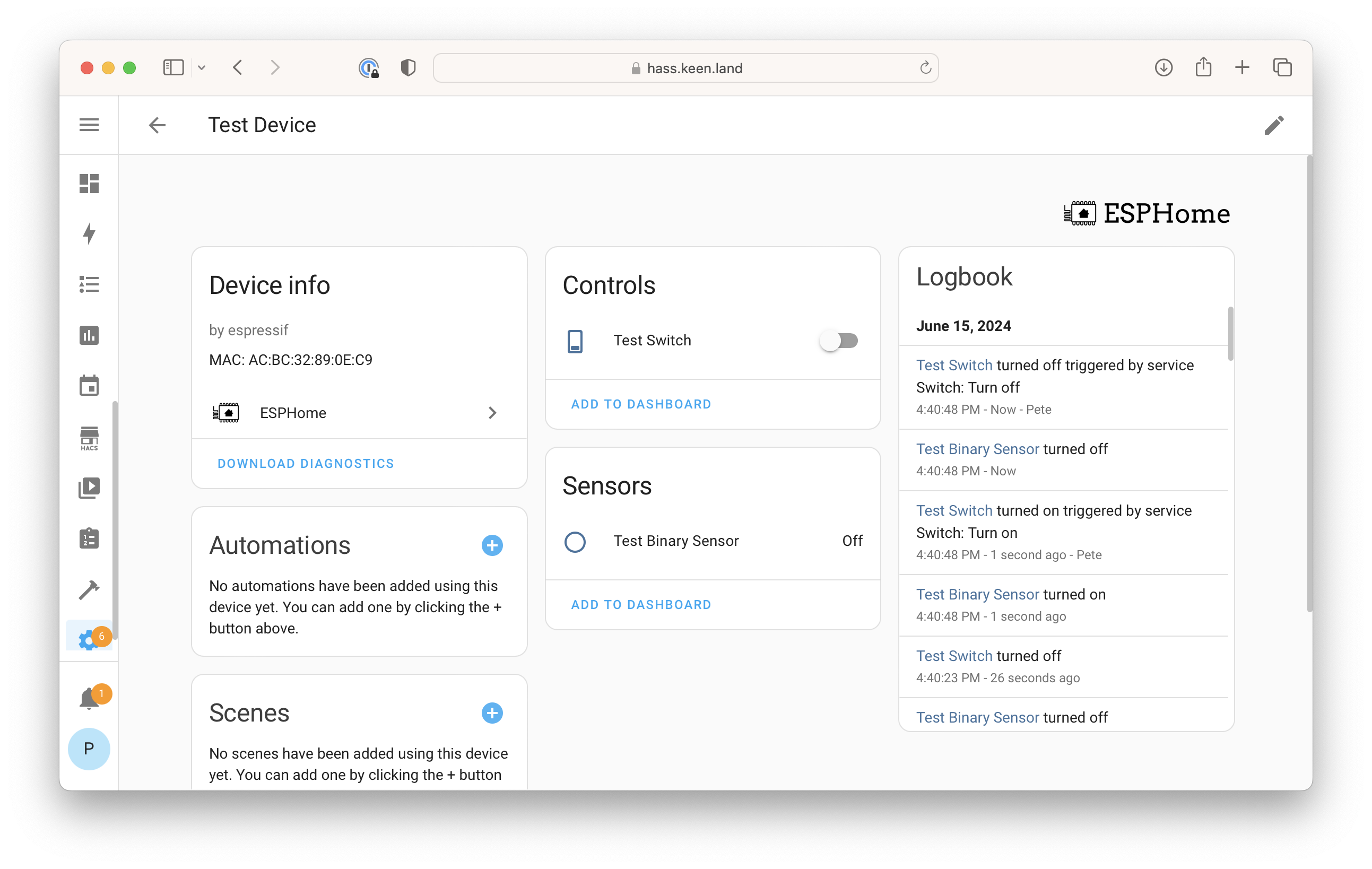

Adding the device to Home Assistant you'll see this:

AIO ESPHome Server Architecture

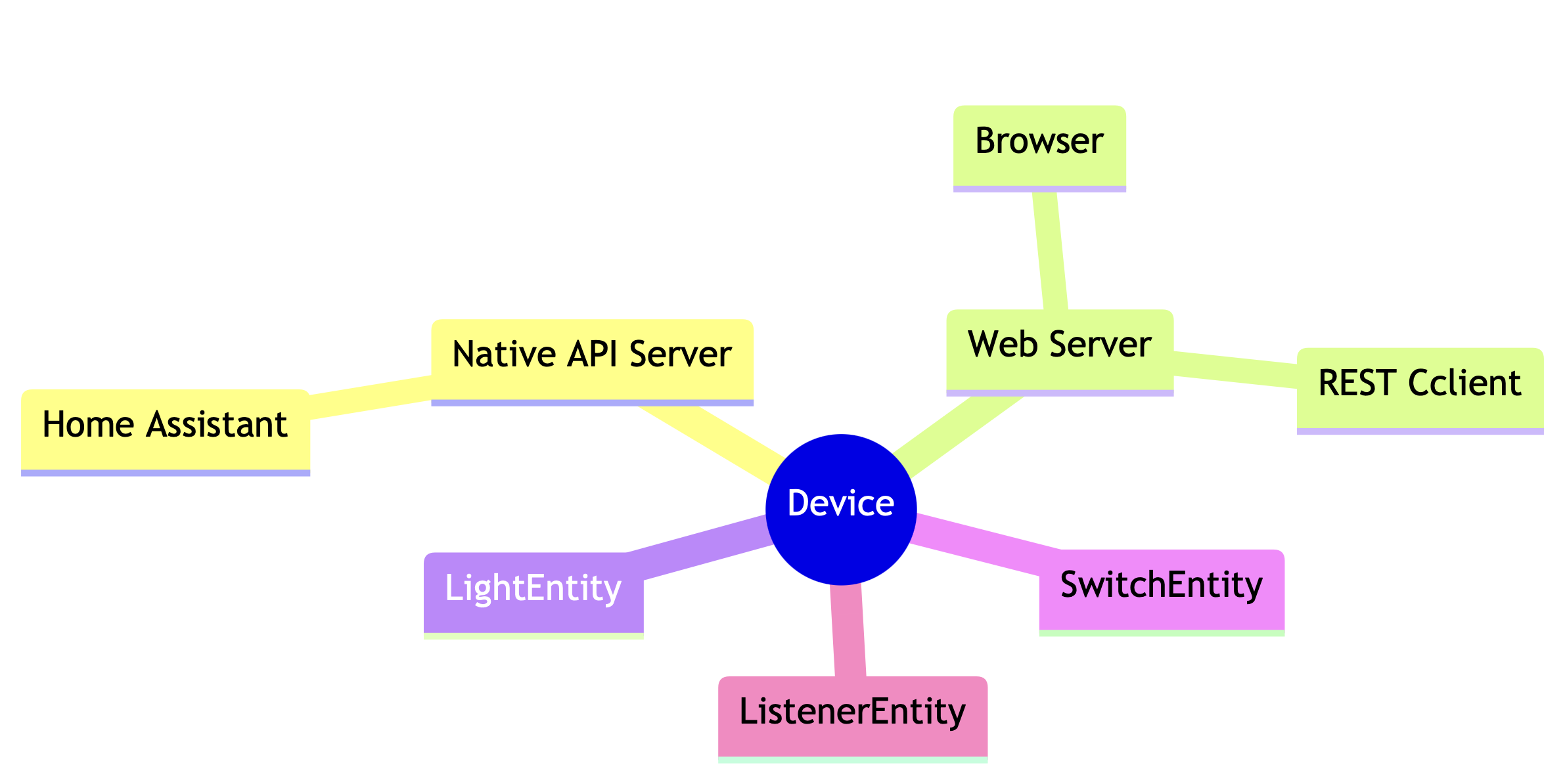

I tried to follow the spirit of ESPHome's architecture when writing the server.

The Device is a central registrar for Entity objects and serves as a message hub.

The native API server and web server are entities that plug into the message bus, as are things like SwitchEntity and BinarySensorEntity.

Everything is async using Python's asyncio.

Any entity with a run method will automatically be scheduled as a task at startup.
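The shape of that design fits in a few lines. What follows is a toy model with hypothetical `Hub`, `Ticker`, and `Recorder` classes, not the library's actual code: a hub that registers entities, fans messages out to everyone except the sender, and schedules any entity that has a `run` method.

```python
import asyncio


class Entity:
    def __init__(self, name):
        self.name = name
        self.device = None

    async def handle(self, key, message):
        pass  # entities ignore messages unless they override this


class Hub:
    def __init__(self):
        self.entities = []

    def add_entity(self, entity):
        entity.device = self
        self.entities.append(entity)

    async def publish(self, sender, key, message):
        # Deliver to every entity except the one that sent the message.
        for entity in self.entities:
            if entity is not sender:
                await entity.handle(key, message)

    async def run(self):
        # Any entity with a run method becomes a task.
        tasks = [
            asyncio.create_task(e.run())
            for e in self.entities
            if hasattr(e, "run")
        ]
        await asyncio.gather(*tasks)


class Ticker(Entity):
    async def run(self):
        for i in range(3):
            await self.device.publish(self, self.name, i)
            await asyncio.sleep(0)


class Recorder(Entity):
    def __init__(self, name):
        super().__init__(name)
        self.seen = []

    async def handle(self, key, message):
        self.seen.append((key, message))


hub = Hub()
recorder = Recorder("recorder")
hub.add_entity(Ticker("ticker"))
hub.add_entity(recorder)
asyncio.run(hub.run())
```

Unlike a real device loop, this one exits once the ticker finishes, which keeps the example easy to run.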

A Production Example

The development case for this library has been driving the addressable LEDs on my house. I found a project named u2if that turns a Raspberry Pi Pico into a USB peripheral that provides a bunch of fun stuff: GPIO, I2C, SPI, PWM, ADC as well as an addressable LED driver for WS2812-compatible lights. The fun wrinkle of the light driver is that it offloads the bitstream generation to the Pico's PIO coprocessors.

I forked u2if and added a few things:

- RGBW support, which was already in the codebase but not available

- Support for the Pico clone boards I have (SparkFun Pro Micro RP2040)

- A set of effects for Neopixel along with a console-mode simulator to use while developing

- A Docker image that bundles the firmware and the Python library

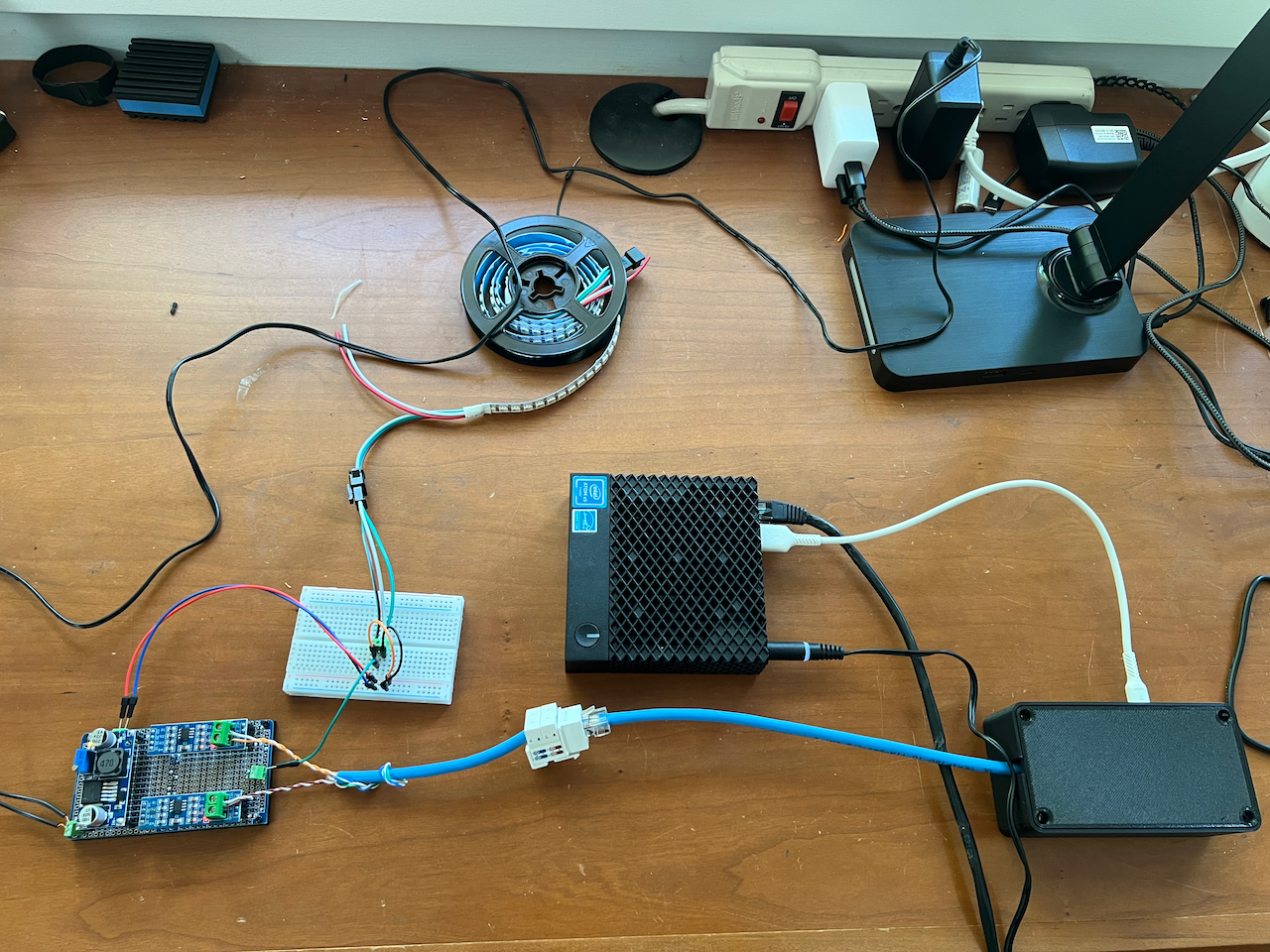

This deployment consists of:

- A Dell Wyse 3040 thin client running Alpine Linux that already handles Z-Wave for the garage

- SparkFun Pro Micro RP2040 running the u2if firmware connected over USB

- Two channels of RS485 transceivers so I can get the very fast, very unforgiving light control signals 40 feet from where the 3040 is mounted to the wall to where the light power injector lives.

Here is the full script that I'm using to drive the addressable lights on my house:

import asyncio

from machine import WS2812B
from neopixel.effects import StaticEffect, BlendEffect, TwinkleEffect

from aioesphomeserver import (
    Device,
    LightEntity,
    LightStateResponse,
    EntityListener,
)

from aioesphomeapi import LightColorCapability


class LightStrip(EntityListener):
    def __init__(self, *args, strings=None, effects=None, **kwargs):
        super().__init__(*args, **kwargs)
        # Each entry in strings is a (driver, pixel_count) pair.
        self.strings = strings or []
        self.num_pixels = sum(length for _, length in self.strings)
        self.effects = effects or {}
        self.current_effect_name = None
        self.current_effect = StaticEffect(count=self.num_pixels)
        self.white_brightness = 0.0
        self.color_brightness = 0.0

    async def handle(self, key, message):
        if not isinstance(message, LightStateResponse):
            return

        await self.device.log(1, f"message.effect: '{message.effect}'")

        # Switch effects only when a known, different effect is requested.
        if message.effect != "" and message.effect != self.current_effect_name:
            if message.effect in self.effects:
                self.current_effect_name = message.effect
                self.current_effect = self.effects[message.effect](self.num_pixels, message)
                self.current_effect.next_frame()

        if self.current_effect:
            self.current_effect.update(message)

        self.color_brightness = message.color_brightness
        self.white_brightness = message.brightness

        if not message.state:
            self.color_brightness = 0.0
            self.white_brightness = 0.0

    def render(self):
        # Scale each float RGBW pixel to 0-255 integers.
        pixels = []
        for i in range(self.num_pixels):
            color = self.current_effect.pixels[i]
            pixel = [
                int(color[0] * 255.0 * self.color_brightness),
                int(color[1] * 255.0 * self.color_brightness),
                int(color[2] * 255.0 * self.color_brightness),
                int(color[3] * 255.0 * self.white_brightness),
            ]
            pixels.append(pixel)

        # Write each string its own consecutive slice of the pixel list.
        # Slice ends are exclusive, so the end index is cur + length.
        cur = 0
        for string, length in self.strings:
            end = cur + length
            string.write(pixels[cur:end])
            cur = end

    async def run(self):
        while True:
            self.current_effect.next_frame()
            self.render()
            await asyncio.sleep(1/24.0)


device = Device(
    name = "Garage Stuff",
    mac_address = "7E:85:BA:7E:38:07",
    model = "Garage Stuff"
)

device.add_entity(LightEntity(
    name="Front Lights",
    color_modes=[LightColorCapability.ON_OFF | LightColorCapability.BRIGHTNESS | LightColorCapability.RGB | LightColorCapability.WHITE],
    effects=["Static", "Twinkle"],
))


def make_twinkle_effect(count, state):
    return BlendEffect(
        TwinkleEffect(count=count, include_white_channel=True),
        StaticEffect(count=count, color=[state.red, state.green, state.blue, state.white], include_white_channel=True),
        mode='lighten',
        include_white_channel=True,
    )


device.add_entity(LightStrip(
    name = "_front_lights_strip",
    entity_id = "front_lights",
    strings = [(WS2812B(23, rgbw = True, color_order="GRBW"), 20)],
    effects={
        "Static": lambda count, state: StaticEffect(count=count, color=[state.red, state.green, state.blue, state.white], include_white_channel=True),
        "Twinkle": make_twinkle_effect,
    },
))

asyncio.run(device.run())

The structure is basically the same as the minimal example.

We import some stuff, we set up an EntityListener class, and then we set up a Device with a LightEntity and an instance of the listener.

In this case, the listener listens for state responses from a Light entity and renders pixels according to a set of effects.

It also has a run method that renders the current effect out every 1/24th of a second.
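The two pieces of arithmetic inside the render step are easy to check in isolation: scaling a float RGBW color to 0-255 integers, and splitting one flat pixel list into a consecutive chunk per physical string. Here is a standalone sketch with hypothetical helper names, using plain lists instead of real LED drivers.

```python
def to_bytes(color, color_brightness, white_brightness):
    """Scale a float RGBW color (0.0-1.0 per channel) to 0-255 integers."""
    r, g, b, w = color
    return [
        int(r * 255.0 * color_brightness),
        int(g * 255.0 * color_brightness),
        int(b * 255.0 * color_brightness),
        int(w * 255.0 * white_brightness),
    ]


def partition(pixels, lengths):
    """Split pixels into consecutive chunks, one per string length."""
    chunks = []
    start = 0
    for length in lengths:
        # Slice ends are exclusive, so start + length covers the whole string.
        chunks.append(pixels[start:start + length])
        start += length
    return chunks


frame = [to_bytes((1.0, 0.5, 0.0, 1.0), 1.0, 0.5) for _ in range(10)]
chunks = partition(frame, [4, 6])
```

The RGB channels and the white channel get separate brightness values, which is why `to_bytes` takes two scale factors.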

Should you use this?

I don't know!

If your constraints match mine, maybe it'd be helpful. If you want to expose a thing to Home Assistant and would prefer it show up as an ESPHome device rather than, say, writing your own HA integration or messing with MQTT or writing RESTful API handlers, this would probably be useful.

That said, I think if your use case fits within ESPHome proper you should use that. ESPHome has built in drivers for so many things and is going to be better supported (i.e. people are paid to work on it).

Pretty neat though, eh?

Using a Static JSON File in Home Assistant

Recently I found myself needing to bring some JSON from a file into a Home Assistant sensor. Specifically, the electricity rates for my power company are woefully out of date on OpenEI so I decided I could just maintain the data myself.

Home Assistant doesn't have a direct way to read JSON data from a file into a sensor. There's the File platform which has a promising name but is actually a trap. File is meant for use cases where something writes to, say, a CSV file continuously and you just want to read the most recent line. It specifically does not read the whole file.

After a lot of searching I came across the Command Line platform. The integration does a number of things, but for our purposes it lets you periodically run a command within the context of the Home Assistant container and bring the output back into Home Assistant as a sensor.

Using Que instead of Sidekiq

A project I've had on the back burner for quite a while is my own little marketing automation tool. Not that existing tools like Drip or ConvertKit aren't adequate, of course. They do the job and do it well.

I enjoy owning my own infrastructure, however, and after Drip changed direction and raised prices I found myself without a home for my mailing list. I thought, why not now?

Using Let's Encrypt Without certbot

In my last post I talked about what a CDN is and why you might want one. To recap, my goal is automatic, magical DNS/SSL/caching management. Today we're going to talk about one aspect of this project: HTTPS and SSL.

SSL, or Secure Sockets Layer, is the mechanism web browsers use to secure and encrypt the connection between your computer and the server that is serving up the content you're looking for.

A few years ago browser vendors started getting very serious about wanting every website to be encrypted. At the time, SSL was expensive to implement because you needed to buy or pay to renew certificates at least once a year.

Almost simultaneously with this increased need for encryption, organizations including the Electronic Frontier Foundation and the Mozilla Foundation started a new certificate authority (organization that issues certificates) named Let's Encrypt. Let's Encrypt is different because it issues certificates for free with an API.

Most people use a tool named certbot that automates the process of acquiring certificates for a given website.

However, certbot doesn't really work for my purposes.

I want to centrally manage my certificates and copy them out to my CDN nodes on a regular basis, which means I need to use the DNS challenge type.

certbot's support for the DNS challenge isn't really adequate for my needs.

What is a CDN and why do I need one?

In my earlier post I talked about how I'm building my own content delivery network (CDN) but I didn't really go into what a content delivery network even is or why someone would want such a thing. A little back story is probably in order.

My Own Private CDN

Hosting my own CDN has long been a completely irrational goal of mine. Wouldn't it be neat, I'd think, if I could tweak every knob instead of relying on CloudFront to do the right thing? Recently I read this article by Janos Pasztor about how he built a tiny CDN for his website. This just proves to me that at least it's not an uncommon irrational thought.

Yesterday I decided to actually start building something. Even if it doesn't make it into production, I'll at least have learned something.

Why your SaaS application should support SAML

Your SaaS application should support SAML (Security Assertion Markup Language) if you're at all interested in big fat contracts from large enterprise customers. And why is that?

One word: money. Large enterprise customers pay quite a lot of money for services that help them do their work with a minimum of fuss. They want to do as little management of your service as they can possibly get away with, preferably zero. If you can't make that happen, but your competitor can, guess who's not getting that big fat contract.

Stripe removed SSLv3 support. Here's how to fix the HTTP 401 errors.

On November 15th Stripe deprecated SSLv3 because of the POODLE vulnerability. On the whole, this has been a good and welcome change, because SSLv3 has been terrible for a very long time.

The problem is that on some systems this causes backend API requests to start failing with an error message from Stripe because they're unable to auto-negotiate TLSv1.2.

Payola v1.2: Now with Subscriptions

Today is release day for Payola v1.2.0 and the big watch word is subscriptions. So now that they're here, how do you use subscriptions with Payola? It's easy:

- Install the gem

- Configure a model

- Set up a form

- Profit!

Building Payola Extensions

A few weeks ago I introduced Payola, a drop-in Rails engine for setting up Stripe billing. Since that time, it's gained over 400 stars on GitHub and the gem has been downloaded almost 2000 times. The most requested feature, subscription payments, is well on its way to being completed.

Payola is more than just a checkout button. It has hooks at various points in the payment flow that let you take action and tie Payola into your application to do things like manipulate the sale object before the charge happens or override the low-level arguments that Payola sends to Stripe. It also has a rich set of notifications when payments complete, fail, or are refunded. In this post, we're going to build a simple extension that sends push notifications when someone buys a product.

Introducing Payola

I released an open source Rails engine named Payola that you can drop into any application to have robust, reliable self-hosted Stripe payments up and running with just a little bit of fuss.

Fix Your Email Deliverability with DMARC

If you do anything more advanced with email than hitting "Send" in Gmail then you should care about deliverability, which is the likelihood that your email will end up in your intended recipient's inbox instead of their spam folder.

Command Line Faxing

When I started Okapi LLC, my little consultancy and publishing house, I had to fax in some forms to the State of Michigan. The entire system for opening businesses in Michigan, in fact, is basically a fax driven API. Being a modern, hip millennial I don't subscribe to a land line phone, nor do I own a fax machine. How was I supposed to fax things?

Enter Phaxio. They have a whole bunch of fax machines (actually they're probably banks of modems) in a data center somewhere and they let you use them with a simple HTTP API. All you have to do is go sign up and make an initial deposit. They'll provide you with an API key and secret pair that you can then use to send faxes using curl.

Start a VirtualBox VM at Boot on Mac OS X

Sometimes you have a VirtualBox VM that's critical to your workflow. For example, the Mac mini in my basement hosts a VM that does things like host all of my private Git repos and provide a staging environment for all of my wacky ideas.

When I have to reboot that Mac mini for any reason, inevitably I find myself trying to push changes to some git repo and forgetting that I have to start up the VM again by hand. And then there's the yelling and the drinking and it's no good for anyone.

Stripe Account Balances for Service Credits

Say you want to give a customer an account credit for some reason. They're an especially good customer, or your service was down for a few minutes and you want to give service credits, or some other reason. You can do this using Stripe's account_balance feature.

Using Stripe Checkout for Subscriptions

Stripe provides a gorgeous pre-built credit card form called Stripe Checkout. Checkout is mainly intended for one-off purchases like Dribbble or my book. Many people want to use it for their Stripe-powered subscription sites so in this article I'm going to present a good way of doing that.

Self-hosted Git Server

I've had a GitHub account since 2008. June 16th, to be exact. For almost six years I've been hosting my code on someone else's servers. It was sure convenient, and free, and I don't regret it one bit, but the time has come to move that vital service in-house.

I've run my own private git server on the Mac mini in my living room since 2012. For the last few years, then, my GitHub account has become more of a public portfolio and mirror of a selection of my private repos. As of today, my GitHub account is deprecated. If you want to see what I'm working on now you can go to my Projects page. I'll be gradually moving old projects over to this page, and new projects will show up there first.

Using the Mailchimp API for Sales

One of the very first things I did when I started working on the idea that eventually became Mastering Modern Payments was set up a Mailchimp mailing list. People would land on the teaser page and add themselves to the list so that when the book came out they would get a little note. After the book launch (with 30% of that initial list eventually buying) I started putting actual purchasers on the list.

The Life of a Stripe Charge

One of the most common issues that shows up in the #stripe IRC channel is people setting up their front-end Stripe Checkout integration and then expecting a charge to show up, which isn't really how Stripe works. In this post I'm going to walk through a one-off Stripe charge and hopefully illustrate how the whole process comes together.

A Practical Exercise in Web Scraping

Yesterday a friend of mine linked me to a fictional web serial that he was reading and enjoying, but could be enjoying more if it was available as a Kindle book. The author, as of yet, hasn't made one available and has asked that fan-made versions not be linked publicly. That said, it's a very long story and would be much easier to read using a dedicated reading app, so I built my own Kindle version to enjoy. This post is the story of how I built it.

Simple Git-backed Microsites

A few days ago I built a new tool I'm calling Sites. It builds on top of git-backed wikis powered by GitHub's Gollum system and lets me build and deploy microsites in the amount of time it takes me to create a CNAME.

Something that I've wanted for a very long time is a way to stand up new websites with little more than a CNAME and a few clicks. I've gone through a few rounds of trying to make that happen but nothing ever stuck. Furthest progressed was a Rails app exclusively hosting Comfortable Mexican Sofa, a simple CMS engine. I never ended up putting any sites on it, though.

GitHub's Pages are of course one of the best answers, but I'm sticking to my self-hosting, built-at-home guns.

Simulating a Market in Ruby

Trading markets of all kinds are in the news pretty much continuously. The flavor of the week is of course the Bitcoin markets but equity and bond markets are always in the background. Just today there is an article on Hacker News about why you shouldn't invest in the stock market. I've participated in markets in one way or another for about a decade now but I haven't really understood how they work at a base level. Yesterday I built a tiny market simulator to fix that.

Little Data: How do we query personal data?

My wife and I recently moved from Portland, OR to Ann Arbor, MI. Among the cacophony of change that is involved with a move like that, we of course changed to the local utility company. Browsing around in their billing application one day I came across a page that showed a daily graph of our energy usage, supposedly valid through yesterday for both gas and electric. And it has a button that spits out a CSV file of the data, which means if I actually wanted to I could build my own tool to analyze our usage.

Post-mortem of a Dead-on-Arrival SaaS Product

A little over a year ago I announced the launch of my latest (at the time) product named Marginalia. The idea was to be a sort of online journal. A cheaper, more programmer friendly alternative to Evernote. It never took off, despite my best intentions, and so a few months ago I told the only active user that I was going to shut it down, and today I finally took that sad action. This post is a short history of the project and a few lessons learned.

DRY your Rails CRUD with Simple Form and Inherited Resources

When you're writing a Rails application you usually end up with a lot of CRUD-only controllers and views just for managing models as an admin. Your user-facing views and controllers should of course have a lot of thought and care put into their design, but for admin stuff you just want to put data in the database as simply as possible. Rails of course gives you scaffolds, but that's quite a bit of duplicated code. Instead, you could use the one-two-three combination of Simple Form, Inherited Resources, and Rails' built-in template inheritance to DRY up most of the scaffolding while still preserving your ability to customize where appropriate. This lets you build your admin interface without having to resort to something heavy like Rails Admin or ActiveAdmin while also not having to build from scratch every time.

Essential Tools for Starting a Rails App in 2013

Over the past few years I've written a number of Rails applications. It's become my default "scratch an itch" tool for when I need to build an app quickly to do a task. Even though Rails is mostly batteries-included, there are a few tools that make writing new applications so much easier. This is my list of tools that I use for pretty much every new Rails project.

Edit: The discussion on Hacker News has some great gems that you should consider using as well.

Mastering Modern Payments Is Out Today!

I'm so proud to announce that Mastering Modern Payments: Using Stripe with Rails is officially launching this morning. Mastering Modern Payments is your guide to integrating Stripe with your Rails application and is packed with sample code and best practices that will make sure your integration works now and in the future.

Announcing: Mastering Modern Payments: Using Stripe with Rails

Over the past few years I've put together a number of projects that use Stripe and their Ruby API to collect payments and manage subscriptions. I've learned quite a bit about how to effectively use the things that Stripe provides to my best advantage. Two months ago I decided that I would like to share that knowledge and so I started working on a guide to integrating Stripe with Rails and today I'd like to announce that Mastering Modern Payments: Using Stripe with Rails will be available on August 15th, 2013.

Shipping with Stripe and EasyPost

Let's say that instead of running a Software as a Service, you're actually building and shipping physical products. Let's say quadcopter kits. People come to your website, buy a quadcopter kit, and then you build it and ship it to them. It takes you a few days to build the kit, though, and you would rather not charge the customer until you ship. Traditionally Stripe has been focused on paying for online services but recently they added the ability to authorize and capture payments in two steps. In this post we're going to explore billing with Stripe and shipping with EasyPost with separate charge and capture.

Page Viewer, a Simple Markdown Viewer

For various projects including Mastering Modern Payments I've found it really useful to be able to view the Markdown source rendered as HTML but I don't really care about editing it online. I put together a little gem named page_viewer which renders Markdown files like this:

Design for Failure: Processing Payments with a Background Worker

Processing payments correctly is hard. This is one of the biggest lessons I've learned while writing my various SaaS projects. Stripe does everything they can to make it easy, with quick start guides and great documentation. One thing they really don't cover in the docs is what to do if your connection with their API fails for some reason. Processing payments inside a web request is asking for trouble, and the solution is to run them using a background job.
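As a rough illustration of the idea, here is a minimal sketch of a background worker that retries a payment when the connection fails. It is not the code from the post: `TransientError`, `process_payments`, and `flaky_charge` are hypothetical names, the queue is an in-process `queue.Queue` rather than a real job system, and the charge function is a stand-in for an API call. A real integration would also need to make retries safe against double charging.

```python
import queue
import threading
import time


class TransientError(Exception):
    """Stands in for a network failure while talking to the payment API."""


def process_payments(jobs, charge, results, max_attempts=3, delay=0.0):
    """Worker loop: pull jobs off the queue and retry transient failures."""
    while True:
        job = jobs.get()
        if job is None:  # sentinel: shut the worker down
            jobs.task_done()
            return
        for attempt in range(1, max_attempts + 1):
            try:
                results[job["id"]] = ("paid", charge(job))
                break
            except TransientError:
                if attempt == max_attempts:
                    # Out of retries: record the failure for later follow-up.
                    results[job["id"]] = ("failed", None)
                else:
                    time.sleep(delay)
        jobs.task_done()


def run_demo():
    calls = {"n": 0}

    def flaky_charge(job):
        # Hypothetical charge function: fails once, then succeeds.
        calls["n"] += 1
        if calls["n"] == 1:
            raise TransientError("connection reset")
        return job["amount"]

    jobs, results = queue.Queue(), {}
    worker = threading.Thread(
        target=process_payments, args=(jobs, flaky_charge, results)
    )
    worker.start()
    jobs.put({"id": "order-1", "amount": 1500})  # the web request only enqueues
    jobs.put(None)
    worker.join()
    return results
```

The web request returns as soon as the job is enqueued, and the failure handling lives entirely in the worker.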

Distributed Personal Wiki

For as long as I can remember I've been trying to find a good way to keep personal text notes. Recipes, notes, ideas, that kind of thing. Things that aren't really suited to blogging. Along the way I've used (and stuck with) PmWiki, DokuWiki, TiddlyWiki, and most recently I built my own sort-of-pseudo-wiki Marginalia.

Lately, though, it's been kind of a drag to use a web-based application just to write down some work notes. Having sort of an obsession with Markdown I decided to just start keeping notes in Markdown-formatted files in a directory. Of course, files that aren't backed up are likely to disappear at any moment, so I naturally stuck them in a git repository and pushed to my personal git server. But then, how do I deal with syncing my work and home machines? I guess I'll manually merge changes...

Increasing the Encryption Noise Floor

Inspired by Tim Bray's recent post about encrypting his website, I decided to enable and force HTTPS for bugsplat.info. The process was straightforward and, turns out, completely free. Read on to find out how and why.

Full Text Search with Whistlepig

Yesterday I suddenly developed the intense need to add search to this site. Among the problems with this is that the site is kind of a weird hybrid between static and dynamic, and it has no database backend. If posts were stored in Postgres this would be a trivial matter, but they're just markdown files on disk. After flailing around for a while I came across a library named Whistlepig which purported to do in-memory full text indexing with a full query language.

November 5, 2013: I've removed search because nobody used it and this way the site can be 100% static.

Deploy 12-Factor Apps with Capistrano::Buildpack

Last month I wrote a short article describing a method of deploying a 12-factor application to your own hardware or VPS, outside of Heroku. Today I'm happy to announce a gem named capistrano-buildpack which packages up and formalizes this deployment method.

Docverter is now Open Source

A few months ago I created a hosted document conversion service named Docverter. The idea was to collect together the best document conversion tools I could find into one comprehensive service and sell access. Many of these tools are difficult to install if you're used to a service like Heroku, so it only made sense to wrap it all up.

Deploying a 12-Factor App with Capistrano

Deploying Heroku-style 12 factor applications outside of Heroku has been an issue for lots of people. I've written several different systems that scratch this particular itch, and in this post I'll be describing a version that deploys one particular app using a Heroku-style buildpack, Foreman, and launchd on Mac OS X via Capistrano.

Run Anything on Heroku with Custom Buildpacks

Heroku is a Platform as a Service running on top of Amazon Web Services where you can run web applications written using various frameworks and languages. One of the most distinguishing features of Heroku is the concept of Buildpacks, which are little bits of logic that let you influence Heroku as it builds your application. Buildpacks give you almost unlimited flexibility as to what you can do with Heroku's building blocks.

Hanging out in the #heroku irc channel, I sometimes see some confusion about what buildpacks are and how they work, and this article is my attempt to explain how they work and why they're cool.

Private Git Repositories with Gitolite and S3

Earlier this year I bought a new Mac mini for various reasons. One of the big ones was so I would have a place to stash private git repositories that I didn't want to host on 3rd party services like GitHub or Bitbucket. This post describes how I set up Gitolite and my own hook scripts, including how I mirror my git repos on S3 using JGit.

On-the-fly Markdown Conversion to PDF and Docx

Today I added PDF, Docx, and Markdown download links to the bottom of every post here on Bugsplat. Scroll down to the bottom to see them, then scroll back up here to read how it works.

Keeping a Programming Journal with Marginalia

In addition to writing on this blog, I've been keeping notes for various things on Marginalia, my web-based note taking and journaling app. In my previous post I talked about the why and how of Marginalia itself. In this post I'd like to talk more about what I actually use it for day to day, in particular to keep programming journals.

Update 2013-10-19: Marginalia is shut down and open source on GitHub

Marginalia: A web-based journaling and note taking tool

I'd like to present my new webapp, Marginalia, a web-based journaling and note taking tool. Notes are written in Markdown, and there are some simple shortcuts for appending timestamped entries at the end of a note, as well as a few email-based tools for creating and appending to notes. You should check it out. Look below the fold for technical details and the origin story.

Update 2013-10-19: Marginalia is shut down and open source on GitHub

Task-oriented Dotfiles

Recently I sat down and reorganized my dotfiles around the tasks that I do day-to-day. For example, I have bits of configuration related to ledger and some other bits related to Ruby development. In my previous dotfile setup, this stuff was all mixed together in the same files. I had started to use site-specific profiles (i.e. home vs work), but that led to a lot of copied config splattered all over. I wanted my dotfiles more organized and modifiable than that.

ProcLaunch v1.2

Just a few bug fixes this time:

- When you send proclaunch SIGHUP, it will send all of the profiles their respective stop signals and then wait for them to shut down. You can tell proclaunch to stop without waiting by sending SIGHUP again.

- You can pass the --log-path command line option to change where proclaunch writes its log. By default this is $profile_dir/error.log

ProcLaunch Improvements and v1.1

ProcLaunch has learned a bunch of new things lately. I've fixed a few bugs and implemented a few new features, including:

- A --log-level option, so you can set a level other than DEBUG

- Kill profiles that don't exist

- Instead of killing the process and restarting, proclaunch can send it a signal using the reload file

- Instead of always sending SIGTERM, the stop_signal file can contain the name of a signal to send when proclaunch wants to stop a profile

- Pid files are properly cleaned up after processes that don't do it themselves

- You won't get two copies of proclaunch if one is already running as root

ProcLaunch v1.0

I kind of started ProcLaunch as a lark. Can I actually do better than the existing user space process managers? It turns out that at least a few people think so. I've gotten a ton of great feedback from thijsterlouw, who actually filed bug reports and helped me work through a bunch of issues. ProcLaunch even has some tests now!

Perl with a Lisp

Browsing around on Hacker News one day, I came across a link to a paper entitled "A micro-manual for Lisp - Not the whole truth" by John McCarthy, the self-styled discoverer of Lisp. One commenter stated that they have been using this paper for a while as a code kata, implementing it several times, each in a different language, in order to better learn that language. The other day I was pretty bored and decided that maybe doing that too would be a good way to learn something and alleviate said boredom. My first implementation is in Perl, mostly because I don't want to have to learn a new language and Lisp at the same time. The basic start is after the jump.

Managing Your Processes with ProcLaunch.

Edit 2010-08-08: ProcLaunch now has a CPAN-compatible install process. See below for details.

I finally got the chance to work some more on proclaunch, my implementation of a user space process manager, like runit or mongrel or god. I wrote up a big overview of the currently available options previously, but in summary: all of the existing options suck. They're either hard to set up, have memory leaks, have a weird configuration language, or are just plain strange. The only viable option was procer, and even that was just sort of a tech demo put together for the Mongrel2 manual.

That's why I started putting together proclaunch. I need some of the features of runit, namely automatic restart, with none of the wackiness, and I wanted it to be easy to automatically configure. I also wanted it to be standalone so I wouldn't have to install a pre-alpha version of Mongrel2 just to manage my own processes.

Blog Generator Updates

I've made some small changes to the way bugsplat.info is generated. First, I refactored publish.pl quite extensively. Instead of being a huge mess of spaghetti-perl, it's nicely factored out into functions, each one doing as little as possible. It got a little longer, but I think it's worth the tradeoff in readability.

Daemons are Our Picky, Temperamental Friends

Modern web applications are complicated beasts. They've got database processes, web serving processes, and various tiers of actual application services. The first two generally take care of themselves. PostgreSQL, MySQL, Apache, Nginx, lighttpd, they all have well-understood ways of starting and keeping themselves up and running.

But what do you do if you have a bunch of processes that you need to keep running that aren't well understood? What if they're well-understood to crash once in a while and you don't want to have to babysit them? You need a user space process manager. Zed Shaw seems to have coined this term specifically for the Mongrel2 manual, and it describes pretty accurately what you'd want: some user-space program running above init that can launch your processes and start them again if they stop. Dropping privileges would be nice. Oh, and it'd be cool if it were sysadmin-friendly. Oh, and if it could automatically detect code changes and restart that'd be nifty too.
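The core of that idea, launching a process and starting it again when it stops, fits in a few lines. This is only a sketch under simple assumptions, not how any of the tools mentioned here work: `supervise` is a hypothetical name, it watches a single command, and it gives up after a fixed number of restarts instead of running forever.

```python
import subprocess
import sys
import time


def supervise(cmd, max_restarts=3, backoff=0.0):
    """Run cmd, restarting it each time it exits, up to max_restarts times.

    Returns the list of exit codes observed, one per launch.
    """
    exit_codes = []
    for _ in range(max_restarts + 1):
        proc = subprocess.Popen(cmd)
        exit_codes.append(proc.wait())  # block until the child stops
        time.sleep(backoff)  # pause between launches to avoid a tight loop
    return exit_codes


if __name__ == "__main__":
    # A child that exits immediately with status 1, standing in for a crashy daemon.
    crashy = [sys.executable, "-c", "import sys; sys.exit(1)"]
    print(supervise(crashy, max_restarts=2))
```

Dropping privileges, handling signals, and watching for code changes are all left out of this sketch.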

Data Mining "Lost" Tweets

Note: this article uses the Twitter V1 API which has been shut down. The concepts still apply but you'll need to map them to the new V2 API.

As some of you might know, Twitter provides a streaming API that pumps all of the tweets for a given search to you as they happen. There are other stream variants, including a sample feed (a small percentage of all tweets), "Gardenhose", which is a statistically sound sample, and "Firehose", which is every single tweet. All of them. Not actually all that useful, since you have to have some pretty beefy hardware and a really nice connection to keep up. The filtered stream is much more interesting if you have a target in mind. Since there was such a hubbub about "Lost" a few weeks ago I figured I would gather relevant tweets and see what there was to see. In this first part I'll cover capturing tweets and doing a little basic analysis, and in the second part I'll go over some deeper analysis, including some pretty graphs!

Iterating Elements in boost::tuple, template style

In my day job I use a mix of perl and C++, along with awk, sed, and various little languages. In our C++ we use a lot of boost, especially simple things like the date_time libraries and tuple. Tuple is a neat little thing, sort of like std::pair except it lets you have up to 10 elements of arbitrary type instead of just the two. One of the major things that it gives you is a correct operator<, which gives you the ability to use it as a key in std::map. Very handy. One tricky thing, though, is generically iterating over every element in the tuple. What then?

Everyone Needs Goals

Creating actionable information out of raw data is sometimes pretty simple, requiring only small changes. Of the few feature requests that I've received for Calorific, most (all) of them have been for goals. Always listen to the audience, that's my motto!

Building Battle Bots with Clojure

Once in a while at Rentrak we have programming competitions, where anyone who wants to, including sysadmins and DBAs, can submit an entry for whatever the problem is. The previous contest involved writing a poker bot which had to play two-card hold'em, while others have involved problems similar in spirit to the Netflix Prize. This time we chose to build virtual robots that shoot each other with virtual cannons and go virtual boom! We'll be using RealTimeBattle, which is a piece of software designed specifically to facilitate contests of this sort. It's kind of like those other robot-battle systems, except instead of requiring you to write your robot in their own arbitrary, broken, horrible language, this lets you write your bot in any language that can talk on stdin and stdout.

Actionable Information

Let's pretend, just for a second, that you want to make some money on the stock market. Sounds easy, right? Buy low, sell high, yadda yadda blah blah blah. Except, how do you know when to buy and when to sell? Not so easy. Being a nerd, you want to teach your computer how to do this for you. But where to start? I discovered a few months ago that there are services out there that will sell you a data feed that literally blasts every single anonymous transaction that happens on any market in the US in real time. They'll also sell you access to a historical feed that provides the same tick-level information going back for several years.

Moose vs Mouse and OOP in Perl

After using Calorific for a month two things have become very clear. First, I need to eat less. Holy crap do I need to eat less. I went on to SparkPeople just to get an idea of what I should be eating, and it told me between 2300 and 2680 kcal. I haven't implemented averaging yet, but a little grep/awk magic tells me I'm averaging 2793 kcal per day. This is too much. So. One thing to work on.

Calorific, a Simple Calorie Tracker

I'm a nerd. I write software for a living. I spend a lot of my day either sitting in a chair in front of a computer, or lying on my couch using my laptop. I'm not what you'd call... athletic. I did start lifting weights about six months ago but that's really just led to gaining more weight, not losing it. A few years back I started counting calories and I lost some weight, and then stopped counting calories and gained it all back. Time to change that.